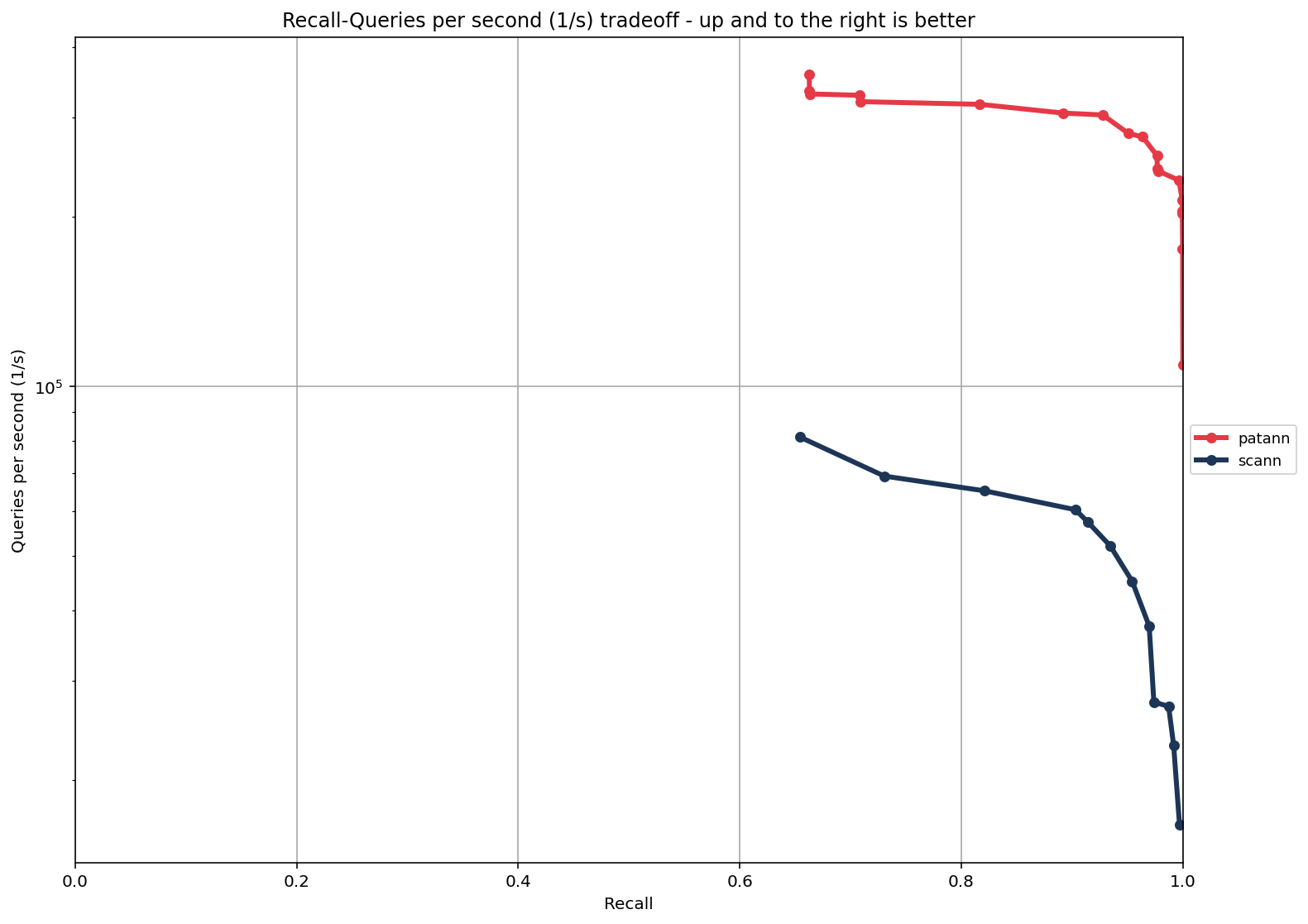

Comparison with Google ScaNN

ScaNN

ScaNN (Scalable Nearest Neighbors) is Google’s state-of-the-art vector similarity search library that combines quantization, dimensionality reduction, and efficient search algorithms to enable fast and accurate approximate nearest neighbor search. Below we compare PatANN and ScaNN across various datasets and metrics.
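Recall@10, the accuracy metric used throughout this comparison, is the fraction of the true 10 nearest neighbors that a search actually returns. A minimal sketch of that calculation follows. The ID lists are hypothetical and are not output from either library.

```python
def recall_at_k(retrieved_ids, true_ids, k=10):
    """Fraction of the true top-k neighbors that appear in the retrieved top-k."""
    true_top = set(true_ids[:k])
    found = set(retrieved_ids[:k]) & true_top
    return len(found) / len(true_top)


# Hypothetical example: 9 of the 10 true neighbors were found.
true_ids = [3, 7, 12, 18, 21, 25, 30, 41, 56, 60]
retrieved_ids = [3, 7, 12, 18, 21, 25, 30, 41, 56, 99]
print(recall_at_k(retrieved_ids, true_ids))  # 0.9
```

In a benchmark, this value is averaged over all queries for each index configuration, which gives one recall figure per measured point.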

Query Time vs. Recall@10 Comparison

Detailed Comparison (SIFT1M, k=10)

Performance Metrics

| Library | Geometric Mean QPS | Median QPS | QPS@95% | Weighted Avg QPS | AUC Value | AUC Normalized |
|---|---|---|---|---|---|---|
| PatANN | 182,526.33 | 190,849.94 | 357,320.88 | 223,090.29 | 83,919.87 | 223,090.29 |
| ScaNN | 40,818.24 | 45,086.66 | 81,244.97 | 57,529.09 | 29,110.29 | 57,529.09 |
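As a rough illustration of how the first two columns can be derived, the sketch below computes a geometric mean and a median over a set of QPS measurements, one per benchmark configuration. The input values are hypothetical. The page does not define QPS@95%, Weighted Avg QPS, or the AUC columns, so those are not reproduced here.

```python
import statistics

# Hypothetical QPS measurements, one per benchmark configuration.
qps_points = [10_000.0, 40_000.0, 160_000.0]

# The geometric mean is less dominated by a few very fast configurations
# than the arithmetic mean would be.
geo_mean = statistics.geometric_mean(qps_points)
median = statistics.median(qps_points)

print(f"geometric mean: {geo_mean:,.2f}")
print(f"median: {median:,.2f}")
```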

Recall at Different QPS Levels

| Library | Recall@10,000 QPS | Recall@50,000 QPS | Recall@100,000 QPS | Recall@200,000 QPS |
|---|---|---|---|---|
| PatANN | 0.99991 | 0.99991 | 0.99991 | 0.99944 |
| ScaNN | 0.99691 | 0.93428 | - | - |
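One plausible reading of this table is "the best recall among measured configurations that reach at least the given QPS". The page does not state the exact procedure, so the sketch below is an assumption, and the operating points in it are hypothetical rather than the measured curves.

```python
def recall_at_qps(points, min_qps):
    """Best recall among operating points that reach at least min_qps.

    points: iterable of (qps, recall) pairs.
    Returns None when no point is fast enough, which would correspond
    to a "-" cell in the table.
    """
    eligible = [recall for qps, recall in points if qps >= min_qps]
    return max(eligible) if eligible else None


# Hypothetical operating points for a single library.
points = [(12_000, 0.997), (55_000, 0.934), (80_000, 0.80)]

for level in (10_000, 50_000, 100_000):
    print(level, recall_at_qps(points, level))
```

Under this reading, a "-" means that no benchmarked configuration reached that throughput, not that recall dropped to zero.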

Algorithm Parameters

| Library | Points | Min K | Max K | K Range | Min Epsilon | Max Epsilon | Median Epsilon |
|---|---|---|---|---|---|---|---|
| PatANN | 280 | 0.62374 | 0.99991 | 0.37617 | 0.72420 | 1 | 0.99718 |
| ScaNN | 33 | 0.49090 | 0.99691 | 0.50601 | 0.56457 | 0.99977 | 0.97712 |
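The K Range column is consistent with Max K minus Min K for both libraries. A quick check using the values from the table (the dictionary keys are illustrative names, not fields from any tool):

```python
# Values copied from the Algorithm Parameters table.
rows = {
    "PatANN": {"min_k": 0.62374, "max_k": 0.99991, "k_range": 0.37617},
    "ScaNN": {"min_k": 0.49090, "max_k": 0.99691, "k_range": 0.50601},
}

checks = {}
for name, row in rows.items():
    computed = round(row["max_k"] - row["min_k"], 5)
    # Compare with a small tolerance to avoid floating-point surprises.
    checks[name] = abs(computed - row["k_range"]) < 1e-9
    print(name, computed, checks[name])
```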

Key Findings

PatANN outperforms ScaNN by a wide margin on SIFT1M: its geometric mean QPS is about 4.5x ScaNN's (182,526 vs. 40,818) and its weighted average QPS is about 3.9x (223,090 vs. 57,529). ScaNN maintains excellent recall (99.7%) at 10,000 QPS and good recall (93.4%) at 50,000 QPS, but it has no measured operating point at 100,000 QPS or above. PatANN continues to deliver recall above 99.9% even at 200,000 QPS.
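The throughput ratios quoted above follow directly from the Performance Metrics table. A short sketch that recomputes them, using only values copied from that table:

```python
# (PatANN, ScaNN) values copied from the Performance Metrics table.
metrics = {
    "Geometric Mean QPS": (182_526.33, 40_818.24),
    "Median QPS": (190_849.94, 45_086.66),
    "QPS@95%": (357_320.88, 81_244.97),
    "Weighted Avg QPS": (223_090.29, 57_529.09),
}

# Ratio of PatANN throughput to ScaNN throughput for each metric.
ratios = {name: patann / scann for name, (patann, scann) in metrics.items()}

for name, ratio in ratios.items():
    print(f"{name}: {ratio:.1f}x")
```

The geometric mean and weighted average ratios round to 4.5x and 3.9x, matching the figures in the text.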

ScaNN combines partitioning with anisotropic vector quantization, which makes it more effective than traditional quantization methods. Even so, PatANN's pattern-aware approach delivers higher recall at every tested throughput level and scales to throughputs that ScaNN does not reach in this benchmark. ScaNN's results come from only 33 data points compared to PatANN's 280, suggesting it may be more parameter-efficient but less capable of reaching the highest performance levels.