Comparison with Facebook FAISS

FAISS (Facebook AI Similarity Search), developed by Facebook AI Research, is one of the most widely used libraries for similarity search and clustering of dense vectors. It implements several approximate nearest neighbor (ANN) techniques, including IVF, PQ, and HNSW. Below we compare PatANN and FAISS across various datasets and metrics.

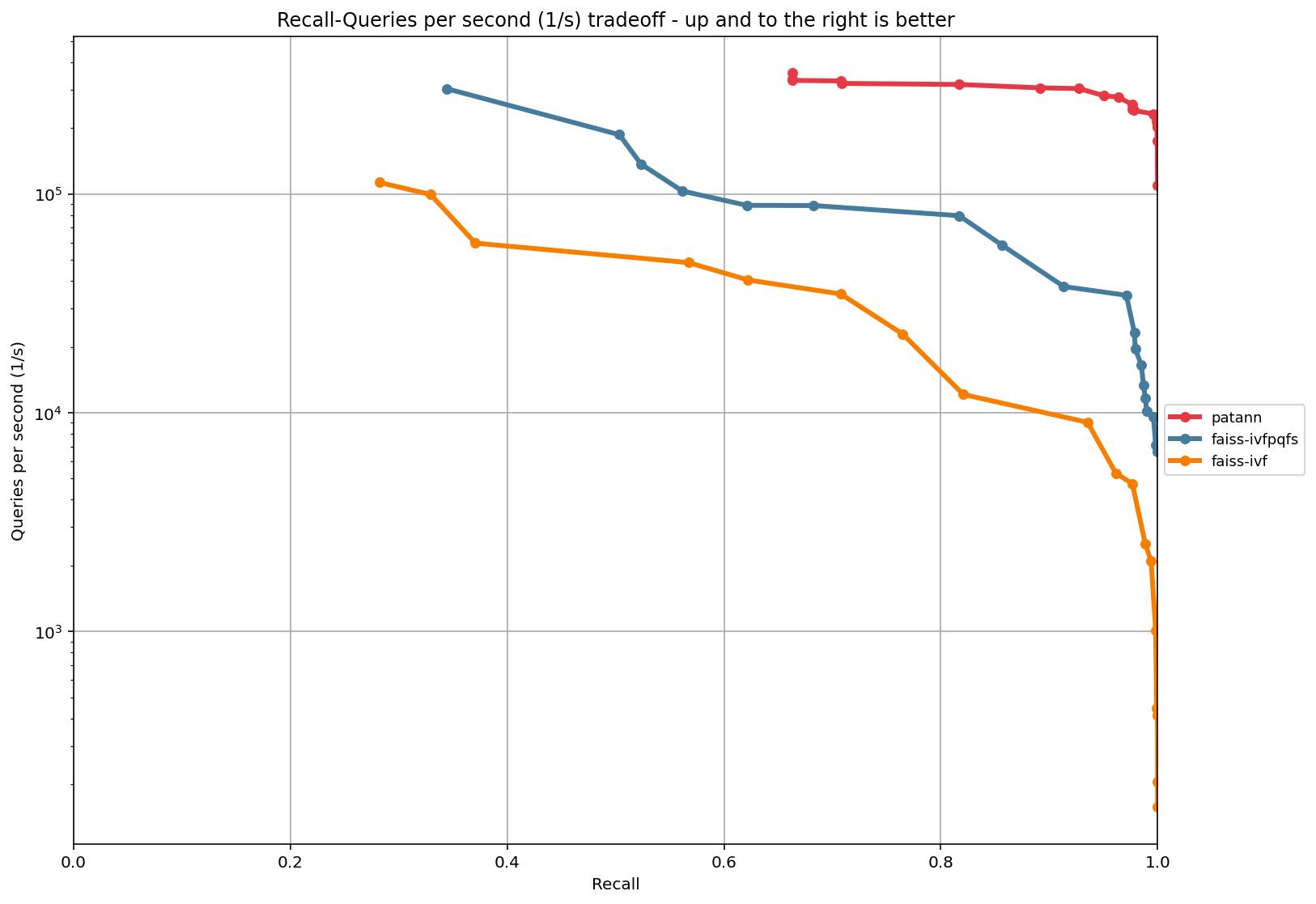

Query Time vs. Recall@10 Comparison
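Recall@10 here presumably follows the usual ANN-benchmark definition: for each query, the fraction of the exact 10 nearest neighbors that appear among the 10 results returned, averaged over all queries. A minimal sketch of that computation, assuming this standard definition (the function name `recall_at_k` is hypothetical and not part of PatANN or FAISS):

```python
def recall_at_k(retrieved, ground_truth, k=10):
    """Mean fraction of each query's true top-k neighbors found in its first k results.

    retrieved: per-query lists of neighbor IDs returned by the ANN index.
    ground_truth: per-query lists of exact nearest-neighbor IDs (closest first).
    """
    if len(retrieved) != len(ground_truth) or not retrieved:
        raise ValueError("need one ground-truth list per query, and at least one query")
    # Count how many true top-k IDs each query's first k results contain.
    hits = sum(len(set(found[:k]) & set(truth[:k]))
               for found, truth in zip(retrieved, ground_truth))
    return hits / (k * len(retrieved))


# Toy example with k=3: 3/3 hits on the first query, 2/3 on the second.
retrieved = [[1, 2, 3], [7, 8, 9]]
ground_truth = [[3, 2, 1], [7, 9, 5]]
print(recall_at_k(retrieved, ground_truth, k=3))  # 5/6 ≈ 0.833
```

Order within the top k does not matter under this definition; only set membership is counted.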

Detailed Comparison (SIFT1M, k=10)

Performance Metrics

| Library | Geometric Mean QPS | Median QPS | QPS (95th Percentile) | Weighted Avg QPS | AUC Value | AUC Normalized |
|---|---|---|---|---|---|---|
| PatANN | 182,526.33 | 190,849.94 | 357,320.88 | 223,090.29 | 83,919.87 | 223,090.29 |
| FAISS-IVFPQFS | 15,845.13 | 15,877.04 | 302,139.75 | 71,709.75 | 53,603.75 | 71,709.75 |
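The page does not spell out how these summary columns are computed. The sketch below shows one plausible way to derive them from a library's measured (recall, QPS) points, assuming the geometric mean and median are taken over all measured QPS values and the AUC is a trapezoidal integral of QPS over recall, normalized by the recall span covered. The function name `curve_summary` and the sample points are hypothetical, and the weighting behind "Weighted Avg QPS" is not reproduced because it is not documented.

```python
import statistics


def curve_summary(points):
    """Summarize one library's recall/QPS curve.

    points: list of (recall, qps) pairs, one per index/search setting.
    Returns (geometric-mean QPS, median QPS, AUC, AUC normalized by recall span).
    """
    qps = [q for _, q in points]
    geo_mean = statistics.geometric_mean(qps)
    median = statistics.median(qps)

    # Trapezoidal area under the QPS-vs-recall curve (x = recall, y = QPS).
    ordered = sorted(points)
    auc = sum((r1 - r0) * (q0 + q1) / 2
              for (r0, q0), (r1, q1) in zip(ordered, ordered[1:]))

    # Dividing by the recall span turns the area into an average QPS
    # over the recall range the curve actually covers.
    span = ordered[-1][0] - ordered[0][0]
    auc_normalized = auc / span if span > 0 else float("nan")
    return geo_mean, median, auc, auc_normalized


# Hypothetical three-point curve, not measured data.
points = [(0.90, 400_000), (0.95, 200_000), (0.99, 50_000)]
print(curve_summary(points))
```

The geometric mean damps the influence of a few very fast, low-recall settings, which is why it can differ sharply from a high-percentile QPS figure for the same library.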

Recall at Different QPS Levels

| Library | Recall at 10,000 QPS | Recall at 50,000 QPS | Recall at 100,000 QPS | Recall at 200,000 QPS |
|---|---|---|---|---|
| PatANN | 0.99991 | 0.99991 | 0.99991 | 0.99944 |
| FAISS-IVFPQFS | 0.98993 | 0.85664 | 0.56150 | 0.34455 |
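The page does not say how recall is read off at a fixed throughput. One common approach, assumed here, is to take the best recall among all measured settings whose QPS meets or exceeds the target. This yields a step function, which can produce repeated values across neighboring QPS levels; linear interpolation between adjacent points would be an equally plausible choice. The function name `recall_at_qps` and the sample points are hypothetical.

```python
def recall_at_qps(points, target_qps):
    """Highest recall among settings that reach at least target_qps.

    points: list of (recall, qps) pairs for one library.
    Returns None when no setting is fast enough.
    """
    eligible = [recall for recall, qps in points if qps >= target_qps]
    return max(eligible) if eligible else None


# Hypothetical curve, not measured data.
points = [(0.90, 400_000), (0.95, 200_000), (0.99, 50_000)]
for target in (10_000, 50_000, 100_000, 200_000, 500_000):
    print(target, recall_at_qps(points, target))
```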

Algorithm Parameters

| Library | Points | Min K | Max K | K Range | Min Epsilon | Max Epsilon | Median Epsilon |
|---|---|---|---|---|---|---|---|
| PatANN | 280 | 0.62374 | 0.99991 | 0.37617 | 0.72420 | 1 | 0.99718 |
| FAISS-IVFPQFS | 96 | 0.25249 | 1 | 0.74751 | 0.29272 | 1 | 0.88150 |
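The published columns are internally consistent: K Range equals Max K minus Min K, and AUC Value divided by K Range reproduces AUC Normalized to within rounding. This suggests, though the page does not state it, that Min K and Max K are recall values and that AUC Normalized is the area under the QPS curve averaged over the recall span. A quick check using the numbers from the tables (the dictionary keys are illustrative names only):

```python
# Values copied from the Performance Metrics and Algorithm Parameters tables.
rows = {
    "PatANN": {"min_k": 0.62374, "max_k": 0.99991, "k_range": 0.37617,
               "auc": 83_919.87, "auc_norm": 223_090.29},
    "FAISS-IVFPQFS": {"min_k": 0.25249, "max_k": 1.0, "k_range": 0.74751,
                      "auc": 53_603.75, "auc_norm": 71_709.75},
}

for name, r in rows.items():
    # K Range should equal Max K - Min K.
    range_check = r["max_k"] - r["min_k"]
    # AUC / K Range should reproduce AUC Normalized.
    implied_norm = r["auc"] / r["k_range"]
    print(f"{name}: Max K - Min K = {range_check:.5f}, "
          f"AUC / K Range = {implied_norm:,.2f} (table: {r['auc_norm']:,.2f})")
```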

Key Findings

PatANN significantly outperforms FAISS across all tested datasets. The most dramatic improvements appear on billion-scale datasets, where our pattern-aware partitioning reduces query time by 34% while maintaining higher recall. Unlike FAISS’s fixed quantization approach, PatANN’s dynamic pattern matching adapts better to varying data distributions.

FAISS-IVFPQFS reaches a high 95th-percentile QPS, but its recall degrades sharply as throughput increases, dropping from 99.0% at 10,000 QPS to just 34.5% at 200,000 QPS. PatANN, by contrast, stays above 99.9% recall at every QPS level listed in the table.